If you have been playing with Forcepoint™ firewalls, you know that traffic logs can be browsed and searched through their central management console (SMC). You’ve probably noticed that searching for old records can sometimes be very slow, even with simple filters like source or destination IP, port, etc.

Computing aggregations or displaying top-N lists with the SMC is not really something you can do on the fly. I believe it was just not designed to do that, at least for now. This is due to the way traffic logs are stored on the SMC: they are only indexed by year/month/day/hour through a filesystem tree, so when you add filters to your searches, every single file matching your time period has to be read. This is extremely frustrating when you want to clean up old firewall policies and need to check which sources or destinations have matched a rule.

Here at Zenetys we have been working with Elasticsearch for a while, and naturally we started to think about indexing Forcepoint™ firewall logs in it.

Forwarding logs from the SMC

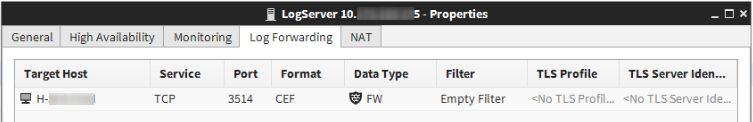

On the SMC, go to Network Elements, right click on the object corresponding to the Log Server, open the Properties window and go to the Log Forwarding tab. From there, you can define several forwarding rules for logs. Each rule may have different options, in particular the target, the format, or a filter on the events to send (just like when browsing logs).

Unfortunately there is no JSON format available; that would have been too easy. However, there is CEF, which is the one we chose because it is readable (mostly key/value), includes by default the fields of interest for the major use cases, and is pretty common, so it can be parsed by many logging tools.

We chose TCP over UDP to get ACKs in transport, which tolerates short disconnections from the target. The logs are sent as \n-delimited plain text lines, with a syslog PRI prefix in front of each record. Here is an example of what’s sent, with lines truncated to 100 bytes:

    [zuser@log6 ~]$ sudo ngrep -d bond0 -W byline -n 2 -S 100 port 3514
    interface: bond0 (10.1.2.0/255.255.255.0)
    filter: ( port 3514 ) and (ip or ip6)
    #
    T 10.4.5.6:36760 -> 10.1.2.3:3514 [AP]
    <6>CEF:0|FORCEPOINT|Firewall|6.5.8|70018|Connection_Allowed|0|cat=System Situations app=Squid HTTP p
    #
    T 10.4.5.6:36760 -> 10.1.2.3:3514 [AP]
    <6>CEF:0|FORCEPOINT|Firewall|6.5.8|70021|Connection_Closed|0|in=9084 out=987 app=Squid HTTP proxy (3
    exit
    4 received, 0 dropped
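To make the record layout concrete, here is a minimal Python sketch of how such a line could be split into the syslog PRI, the CEF header and the extension key/value pairs. It is only an illustration and not the parser we used (that job is done by Filebeat); the function name `parse_cef_line` and the output field names are made up for the example, and the sample line is a shortened version of the capture above. CEF escape sequences are not unescaped here.

```python
import re

# Layout: <PRI>CEF:version|vendor|product|device_version|signature_id|name|severity|extensions
_LINE_RE = re.compile(r"^<(\d{1,3})>CEF:(\d+)\|(.*)$")
# An extension key is a word followed by "=", at the start or right after a space.
_KEY_RE = re.compile(r"(?:^| )([A-Za-z0-9_.\-]+)=")

HEADER_FIELDS = ("vendor", "product", "device_version",
                 "signature_id", "name", "severity")


def parse_cef_line(line):
    """Split one PRI-prefixed CEF record into a dict (illustrative only)."""
    m = _LINE_RE.match(line.rstrip("\n"))
    if m is None:
        raise ValueError("not a PRI-prefixed CEF record")
    pri = int(m.group(1))
    # Split on unescaped pipes: six header fields, then the extension string.
    parts = re.split(r"(?<!\\)\|", m.group(3), maxsplit=6)
    if len(parts) != 7:
        raise ValueError("incomplete CEF header")
    record = {
        "syslog_facility": pri >> 3,   # PRI = facility * 8 + severity
        "syslog_severity": pri & 7,
        "cef_version": int(m.group(2)),
    }
    record.update(zip(HEADER_FIELDS, parts[:6]))
    # A value runs until the next " key=" token, so it may contain spaces.
    ext = parts[6]
    keys = list(_KEY_RE.finditer(ext))
    extensions = {}
    for i, key in enumerate(keys):
        end = keys[i + 1].start() if i + 1 < len(keys) else len(ext)
        extensions[key.group(1)] = ext[key.end():end]
    record["extensions"] = extensions
    return record


if __name__ == "__main__":
    sample = ("<6>CEF:0|FORCEPOINT|Firewall|6.5.8|70021|Connection_Closed|0|"
              "in=9084 out=987 app=Squid HTTP proxy")
    print(parse_cef_line(sample))
```

Because values such as `app=Squid HTTP proxy` contain spaces, the sketch looks for the next `key=` token instead of splitting on whitespace.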

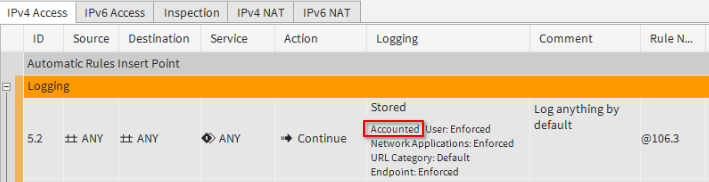

Note: in order to get in/out bytes on connection closed or progress events, accounting information must be logged. We usually do it with a continue rule on top of the policy; the option Connection Closing: Log Accounting Information must be set.

Parsing to JSON for Elasticsearch indexing

We normally use RSyslog to receive logs at a central point because it scales well. When it comes to parsing, RSyslog generally fits for simple cases and it has an output module for Elasticsearch. In the context of this experiment, we reused an old server with an old version of RSyslog that could not be updated. Unfortunately this build of RSyslog did not include the required modules. We also know that the CEF decoder in the current stable version of liblognorm does not correctly parse the first extension key name of Forcepoint™ logs (see GitHub PR#331).

Since we’ve always tried to avoid using Logstash (because RAM may be cheap, but come on!), we decided to see what would come out of experimenting with Filebeat.

It is light, has a configurable TCP input and several processors for dissecting and manipulating data, including a CEF parser. Above all, it has decent scripting capabilities for complex cases through an embedded JavaScript interpreter. This is something RSyslog is really really really missing IMHO.

In the end, the only difficulty was to set up a proper timestamp field, because of some limitations with the date format used in Forcepoint™ logs:

- the date is in the Log Server’s local time but it does not include timezone information;

- it has only precision to the second;

- by default the date in the log is the reception time on the Log Server, not the creation time of the event as reported by the firewall.

The precision issue is logged at Forcepoint™ as a feature request. The timestamp reported by the firewall can be added to the list of CEF fields with SMC > 6.5.2. We managed to work around the timezone issue in Filebeat by forcing it; the method is absolutely not ideal and it was not straightforward (see comments in filebeat.yml). However, this is where it’s important to have decent scripting capabilities when manipulating logs, to be able to quickly switch to plan B and not get stuck.
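The idea of "forcing" a timezone can be illustrated outside Filebeat with a few lines of Python. This is a sketch of the principle only, not a transcription of our Filebeat scripts: the timestamp layout in `NAIVE_FORMAT`, the helper name `to_utc_iso` and the sample value are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone

# Assumed layout of the zone-less timestamp; adjust it to what the
# Log Server actually emits.
NAIVE_FORMAT = "%b %d %Y %H:%M:%S"


def to_utc_iso(naive_text, local_tz):
    """Attach local_tz to a zone-less timestamp and return it as UTC ISO 8601."""
    naive = datetime.strptime(naive_text, NAIVE_FORMAT)
    # The log carries no offset, so we declare the zone ourselves.
    aware = naive.replace(tzinfo=local_tz)
    return aware.astimezone(timezone.utc).isoformat()


if __name__ == "__main__":
    # Fixed +02:00 offset, used here only to keep the example self-contained.
    log_server_tz = timezone(timedelta(hours=2))
    print(to_utc_iso("Jun 03 2020 14:05:09", log_server_tz))
    # prints 2020-06-03T12:05:09+00:00
```

A fixed offset ignores daylight saving time changes. On Python 3.9+, passing `zoneinfo.ZoneInfo("Europe/Paris")` (or whatever zone the Log Server runs in) as `local_tz` lets the standard library pick the right offset for each date.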

Filebeat configuration:

⇨ /etc/filebeat/filebeat.yml

⇨ /etc/filebeat/scripts/event-created.js

⇨ /etc/filebeat/scripts/iana-proto-num2name.js

⇨ /etc/filebeat/scripts/set-cef-rt-to-tmp-timestamp.js

⇨ /etc/filebeat/scripts/set-index-rj.js

Elasticsearch indexing

The indexes holding the data were named ecs-forcepoint-firewall-*, but we should have swapped the firewall and forcepoint terms to be able to create a vendor-independent ecs-firewall-* index pattern in Kibana. In order to get a proper mapping, the following templates were applied to these indexes:

| name | index_patterns | order | version |
|---|---|---|---|
| ecs | [ecs-*] | 1 | |
| ecs-forcepoint-firewall | [ecs-forcepoint-firewall-*] | 500 | |
| ecs-forcepoint-firewall-rj | [ecs-forcepoint-firewall-rj-*] | 700 | |

The base template is a snapshot from the ECS project; it defines all the fields standardized in the Elastic Common Schema. Here we’ve added a settings.index.default_pipeline: ecs property, but we should have kept it vanilla and added another base template matching ecs-*.
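The order values matter because, with legacy index templates, every template whose pattern matches a new index is applied, lowest order first, and higher orders override lower ones. The following Python sketch simulates that merge for the three templates listed above. The template names, patterns and orders come from this post, as does the `default_pipeline` setting; the replica and refresh values, the index name suffixes and the helper `effective_settings` are invented for the illustration.

```python
from fnmatch import fnmatchcase

# Names, patterns and orders are the ones listed above.
# Replica and refresh values are made up for this example.
TEMPLATES = [
    {"name": "ecs", "index_patterns": ["ecs-*"], "order": 1,
     "settings": {"index.default_pipeline": "ecs"}},
    {"name": "ecs-forcepoint-firewall",
     "index_patterns": ["ecs-forcepoint-firewall-*"], "order": 500,
     "settings": {"index.number_of_replicas": 1,
                  "index.refresh_interval": "30s"}},
    {"name": "ecs-forcepoint-firewall-rj",
     "index_patterns": ["ecs-forcepoint-firewall-rj-*"], "order": 700,
     "settings": {"index.number_of_replicas": 0,
                  "index.refresh_interval": "-1"}},
]


def effective_settings(index_name, templates=TEMPLATES):
    """Return (names of applied templates, merged settings) for an index name."""
    matching = [
        t for t in templates
        # fnmatchcase is close enough here: the patterns only use "*".
        if any(fnmatchcase(index_name, p) for p in t["index_patterns"])
    ]
    applied, merged = [], {}
    # Lower order is applied first, so higher order wins on conflicts.
    for template in sorted(matching, key=lambda t: t["order"]):
        merged.update(template["settings"])
        applied.append(template["name"])
    return applied, merged


if __name__ == "__main__":
    # Hypothetical index names, for illustration only.
    for name in ("ecs-forcepoint-firewall-2020.06",
                 "ecs-forcepoint-firewall-rj-2019.12"):
        print(name, effective_settings(name))
```

An index created for reinjected logs matches all three patterns, so it inherits the base mapping and pipeline while the order 700 template gets the last word on tuning.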

The second template defines the non-standard forcepoint.* fields we’ve added because they have no ECS equivalent. We also extend a few ECS fields to index them both as keyword and text, or to set a custom analyzer on them. This is also where we set the shards, replicas and refresh interval for the indexes. The third template only tunes the indexes created when manually reinjecting old logs exported from the SMC.
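To show the general shape of such a template, here is a Python sketch that builds a legacy index template body as a dictionary. It is not our actual template (linked below): the pattern and order are the ones from this post, but the shard, replica and refresh values, the `forcepoint.example_field` name and the choice of `rule.name` as the extended ECS field are all placeholders, and the typeless mapping format assumes Elasticsearch 7.

```python
import json


def build_forcepoint_template():
    """Build an illustrative legacy index template body (placeholder values)."""
    return {
        "index_patterns": ["ecs-forcepoint-firewall-*"],
        "order": 500,
        "settings": {
            "index": {
                "number_of_shards": 1,       # assumed value
                "number_of_replicas": 1,     # assumed value
                "refresh_interval": "30s",   # assumed value
            }
        },
        "mappings": {
            "properties": {
                # Vendor-specific fields without an ECS equivalent.
                "forcepoint": {
                    "properties": {
                        # Hypothetical field name, for illustration only.
                        "example_field": {"type": "keyword"},
                    }
                },
                # An ECS keyword field extended with a "text" sub-field so it
                # can be searched both exactly and as analyzed text.
                "rule": {
                    "properties": {
                        "name": {
                            "type": "keyword",
                            "fields": {"text": {"type": "text"}},
                        }
                    }
                },
            }
        },
    }


if __name__ == "__main__":
    # The resulting JSON is what would be sent as the body of a
    # PUT _template/<name> request.
    print(json.dumps(build_forcepoint_template(), indent=2))
```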

Filebeat did not offer a processor for geocoding of IP addresses against MaxMind databases, so we did that part on the Elasticsearch side with a pipeline at ingest time. This is also where we drop the internal _ field we needed in Filebeat (see notes in filebeat.yml).
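For readers unfamiliar with ingest pipelines, the following Python sketch builds a pipeline body with the two kinds of processors mentioned above: `geoip` for geocoding and `remove` for dropping a temporary field. It is an approximation, not our pipeline (linked below). The ECS field names `source.ip` and `destination.ip` are assumed to hold the addresses, and `INTERNAL_FIELD` is a hypothetical placeholder since the real field name lives in filebeat.yml.

```python
import json

# Hypothetical placeholder for the temporary field set by Filebeat.
INTERNAL_FIELD = "internal_tmp"


def build_ecs_pipeline(internal_field=INTERNAL_FIELD):
    """Build an illustrative ingest pipeline body: geocode IPs, drop a temp field."""
    processors = []
    for side in ("source", "destination"):
        processors.append({
            "geoip": {
                "field": side + ".ip",          # address to look up
                "target_field": side + ".geo",  # where the ECS geo.* data goes
                "ignore_missing": True,         # skip events without that address
            }
        })
    processors.append({
        "remove": {"field": internal_field, "ignore_missing": True}
    })
    return {
        "description": "Geocode source/destination IPs and drop a temporary field",
        "processors": processors,
    }


if __name__ == "__main__":
    # The resulting JSON is what would be sent as the body of a
    # PUT _ingest/pipeline/<name> request.
    print(json.dumps(build_ecs_pipeline(), indent=2))
```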

Elasticsearch configuration:

⇨ template ecs

⇨ template ecs-forcepoint-firewall

⇨ template ecs-forcepoint-firewall-rj

⇨ pipeline ecs

Et voilà!

We are now able to search and aggregate way faster than from the SMC. Here is a sample document to give an idea of the result.

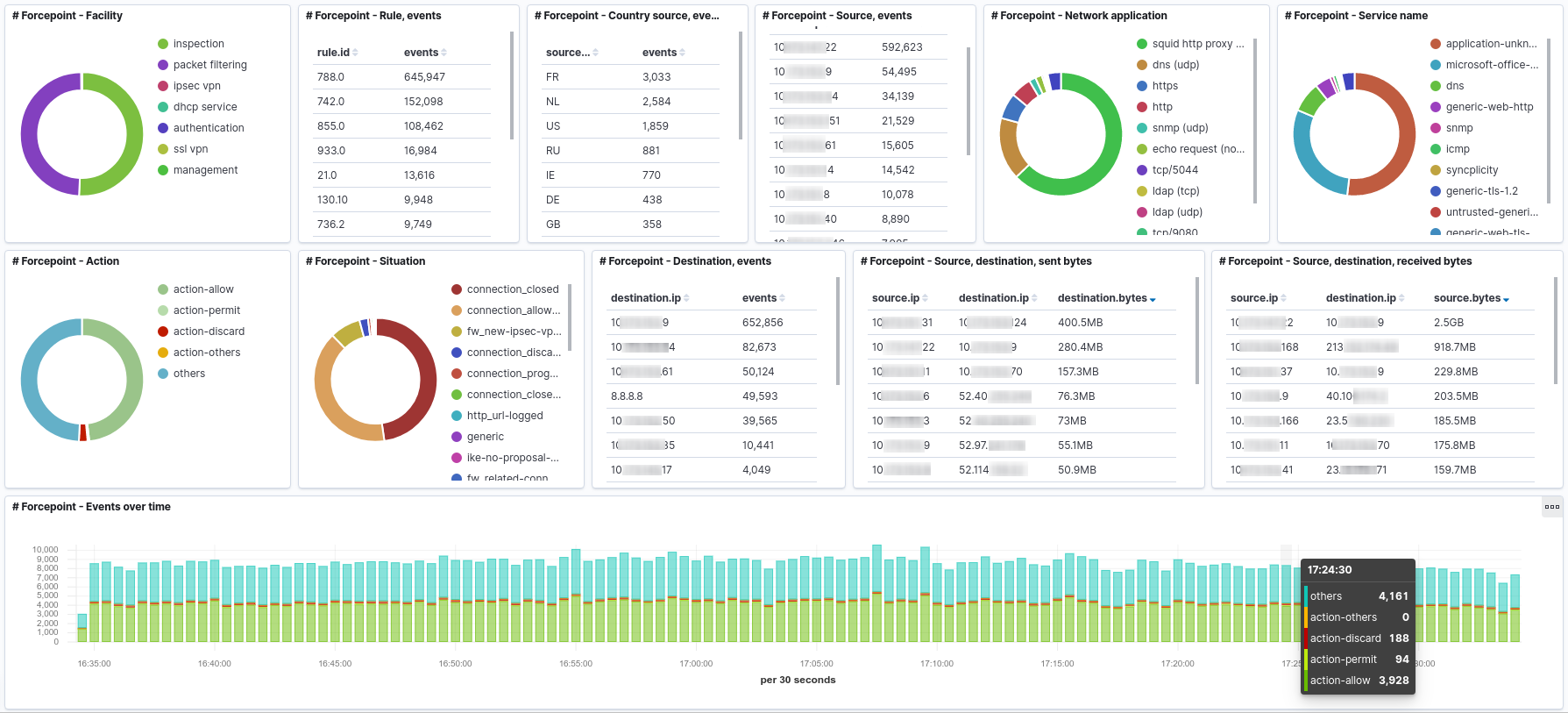
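As an illustration of the kind of question that started this experiment (which sources matched a given rule?), here is a Python sketch that builds a terms aggregation request body. The helper `top_sources_for_rule`, the sample rule identifier and the assumption that the rule identifier is indexed in the ECS field `rule.id` are ours for the example; adapt the field names to the actual mapping.

```python
import json


def top_sources_for_rule(rule_id, since="now-90d", size=20):
    """Build a search body listing the most frequent source IPs for one rule."""
    return {
        "size": 0,  # only the aggregation is wanted, not the hits
        "query": {
            "bool": {
                "filter": [
                    {"term": {"rule.id": rule_id}},             # assumed field
                    {"range": {"@timestamp": {"gte": since}}},
                ]
            }
        },
        "aggs": {
            "top_sources": {
                "terms": {"field": "source.ip", "size": size}
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical rule identifier; the body would be sent to
    # ecs-forcepoint-firewall-*/_search.
    print(json.dumps(top_sources_for_rule("2097152.1"), indent=2))
```

Swapping `source.ip` for `destination.ip` or a port field gives the other top-N lists that are painful to obtain from the SMC.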

Although the original purpose of this experiment was to ease cleaning of firewall rules, it is now possible to create Kibana dashboards. Here is an example:

Greetings

Elasticsearch, https://www.elastic.co/elasticsearch/

ECS fields reference, https://www.elastic.co/guide/en/ecs/current/ecs-field-reference.html

Filebeat, https://www.elastic.co/beats/filebeat

RSyslog, https://www.rsyslog.com/

Forcepoint™ NGFW, https://www.forcepoint.com/product/ngfw-next-generation-firewall